Have you ever come across a video in which Nicolas Cage, with long, loose blonde hair, was singing “I Will Survive” by Gloria Gaynor? Or have you ever bumped into the Basic Instinct interrogation scene that made cinema history? Except that in this version, you were probably staring in horror at Steve Buscemi’s features superimposed on the face of the busty Catherine Tramell instead of Sharon Stone’s. Did it creep you out? In all honesty, it gave me nightmares for days. I have to admit that I’ll probably never watch Basic Instinct with the same eyes again.

Well, jokes aside, if this has ever happened to you, you most likely came across a deepfake, and you probably didn’t recognize it on the spot. But what are deepfakes, and why are they making so many headlines? How do they relate to social media, privacy, and the protection of sensitive data? With deepfakes emerging and trending across social media platforms and forums, what exactly is at stake for online users? What pressing issues and challenges do they entail?

Now let’s put aside for a few seconds the nightmare-inducing vision of Steve Buscemi nonchalantly and sensually crossing his legs during the questioning scene. Let’s imagine a fictional but very plausible scenario. One day a friend alerts you that hardcore images (or videos) of you are circulating online. You are not an adult performer, and you have never consented to sharing such images. You have never even shot them, so these are not pictures that were illegally shared without your consent. This time it is not even about revenge porn, since you have never recorded yourself or your partner during intimate and private moments. These images have simply never existed in reality. They are fake, artificially created, yet they bear your face, as weird and alienating as that might sound. And, once again, the overwhelming majority of them are pornographic and explicit: who could have guessed, right?

Deepfake is an artificial intelligence-based human image synthesis technique that took its first steps back in 2017. It is now predominantly used to combine and superimpose existing images and videos onto source images or videos through a machine learning technique known as a generative adversarial network (GAN). More specifically, deepfakes combine modern deep learning techniques with lip-syncing. Deepfake is a fast-growing phenomenon, both qualitatively and quantitatively, to the point that it has become a new weapon in the arsenal of cyber warfare, used to damage a company, a person’s reputation, or a rival country’s elections, just to name a few targets. Backing up this statement, a report released by Deeptrace at the end of 2019 found that the number of deepfake videos available online had skyrocketed since December 2018, reaching 14,678 as of September 2019. Moreover, 96% of them were pornographic in nature, which suggests they are overwhelmingly created for malicious and mischievous purposes.

The growing popularity of deepfakes has led to the development of software, often open source, based on algorithms capable of generating fake multimedia content with more accessible methods than those required by the most advanced GANs. A well-known case is FakeApp, an application developed and spread through the r/deepfakes community on Reddit, which was eventually banned by the administrators of the popular social network because of the growing spread of pornographic material. FakeApp opened a new era for deepfake enthusiasts, and other projects soon followed, often supported by university research groups, such as DeepFaceLab and FaceSwap. Thanks to these tools, some good tutorials, and a few hours of study, even a layperson can create and spread a deepfake online without the slightest knowledge of programming or coding.

But what is the ultimate danger? If this phenomenon keeps gaining ground in the coming years, it could lead to zero-trust societies, in which overly skeptical people doubt everything they see or hear, even what they witness with their own eyes. Just think of the effect of deepfakes on the ambient audio interceptions used in criminal justice: as evidence, they would become virtually unusable. The possibilities offered by artificial intelligence today seem more boundless than ever. While there is a deep desire for progress, there is also the fear that all this technology could slip out of our hands. Some deepfake creators are also driven by political motives. In that case, their goals range from steering and confusing public opinion to increasing distrust in institutions, traditional information sources, and media outlets. This new technology is therefore packed with ethical questions and uncertain future applications.

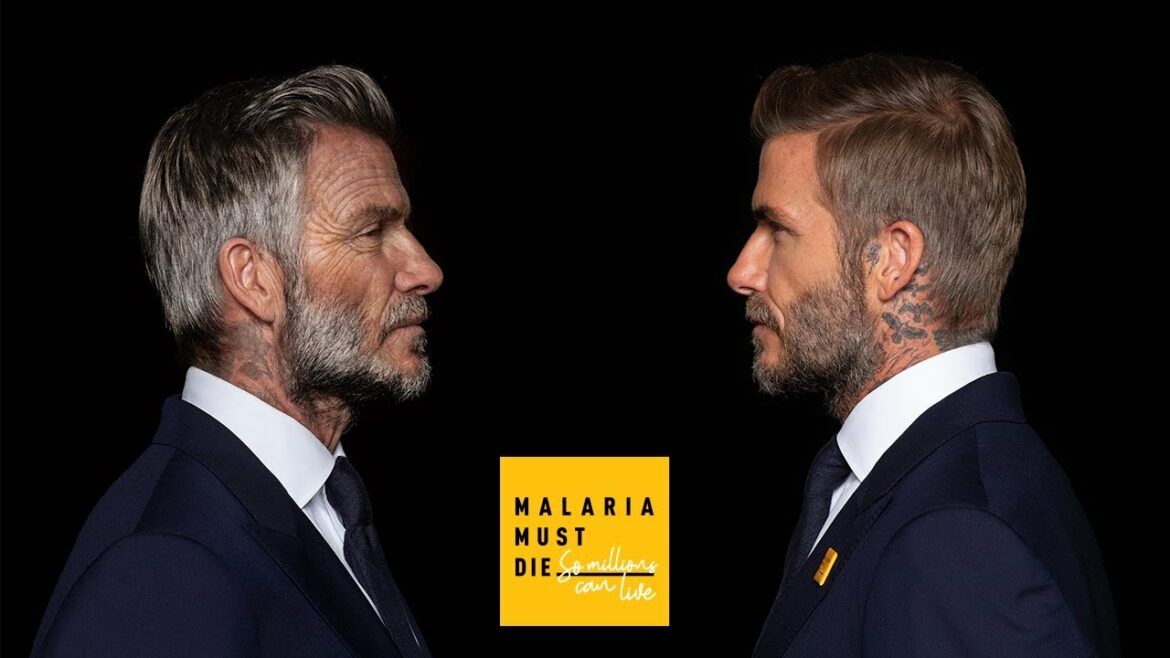

But since every story has another side, we shouldn’t limit ourselves to thinking only about the malicious uses of deepfakes. I recognize this concept may be hard to accept, but take, for instance, the David Beckham announcement made to raise awareness of the worldwide “Malaria Must Die” campaign in 2019. Using this kind of technology, the former football champion was shown speaking nine different languages to share a powerful, socially important message. Ideally, then, deepfakes could help break down language barriers, making content, especially socially relevant content, more accessible. The technology behind deepfakes can also benefit the healthcare industry.

For instance, it could help protect patient data against fraud while supporting the development of new diagnosis and monitoring practices. Hospitals could, for example, create realistic deepfake patients for testing and experimentation without putting real people’s safety and health at risk, opening up room to test new diagnostic and monitoring methods. So, when it comes to AI and deepfakes, the best approach would be cautious trust, open to possibilities, rather than unconditional fear.